The Power of RelevanceAI in Social Research

Meet Christy Arnott, the Director of ASDF Research. With expertise in social research methods, data analysis, and online survey programming, they excel in survey design and developing data management systems. A skilled project manager, they offer strategic insights and planning. Christy’s core values of innovation, accountability, and respect drive their pursuit of best practices, creativity, equity and efficiency. Their commitment to knowledge sharing and sustainability sets them apart in the field.

In this special piece, Christy shares knowledge on RelevanceAI, a piece of software using AI to help code written comments. Read on for the benefits of this tool in analysing community feedback, project examples where Christy utilised RelevanceAI, and why not ChatGPT?

What is this tool and its benefits?

RelevanceAI – https://relevanceai.com/for-market-research

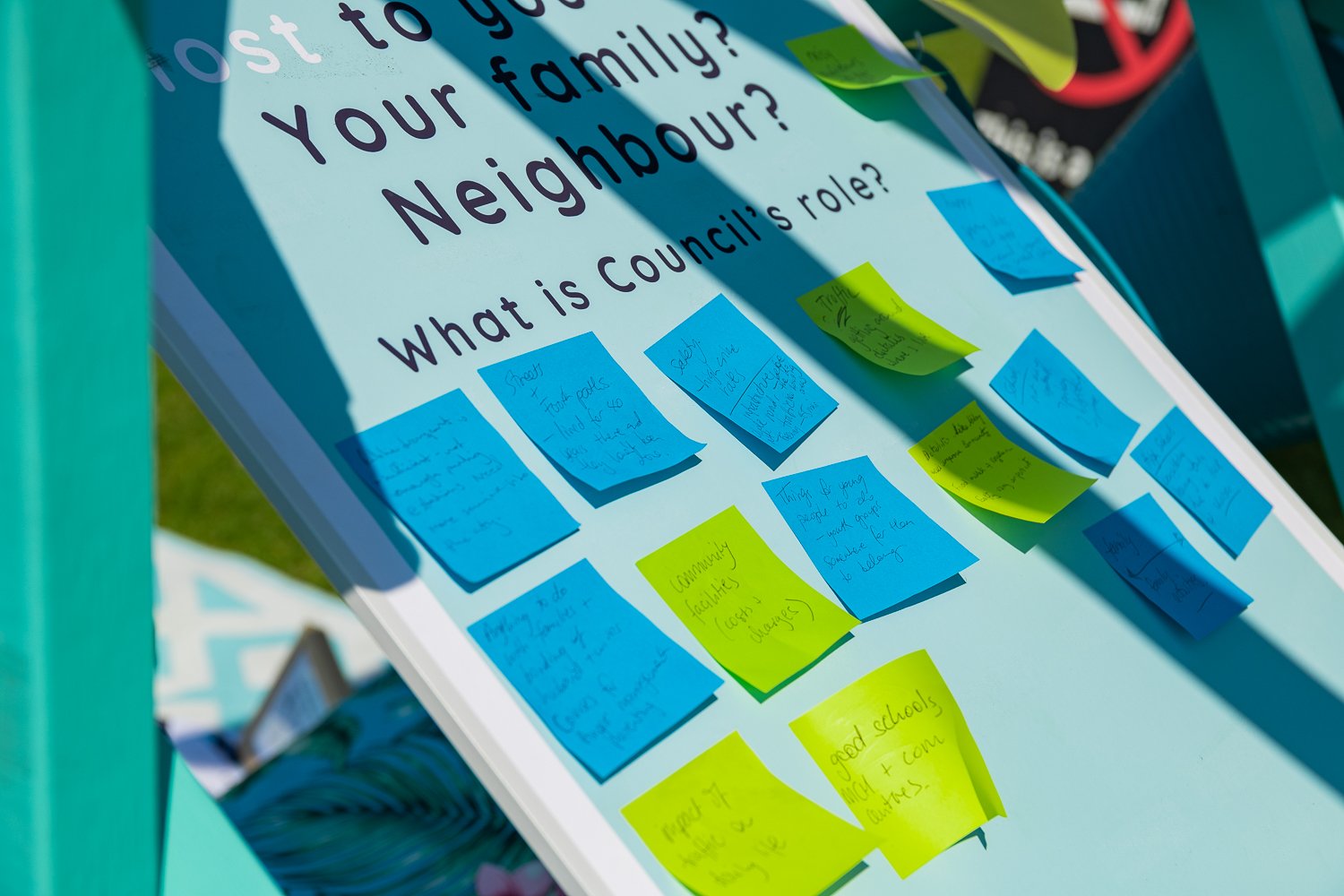

This is a piece of software that uses AI to help you code written comments (thematic analysis). So let’s say we have done a survey with 2,000 responses and there is 1 open-ended question where respondents could write in their answer. We can load in the written comments and use their AI system to generate ‘tags’ to group comments into themes for analysis.

Benefits:

Much faster coding

High level of accuracy

Can still have a very hands-on approach

Benefits of using the tool in community engagement?

Note, we use it for social research analysis, not community engagement.

For years, we have been doing our thematic analysis by hand. That is, we list all of the comments in Excel and then read through them all and create the tags/codes as we go. This has been a great process as we can be highly accurate (that is, understand the tone and context, rather than just grouping like words together) but it is very time consuming, typically taking an hour to tag/code around 100 comments. We have been resistant to using software to assist in the past as we had tried a few options and none of them provided tags/codes that we felt adequately considered the nuances in what people had written (that is, the meaning rather than just the words).

This AI system, however, is able to interpret the nuances. You also have full control over the process: you can revise the tags and then re-run the tagging, and you can go through each comment to see how it has been tagged and update any tags that need to be revised.

You can ask it to assign comments according to a list of tags that you provide, or you can ask it to come up with a recommended set of tags and then refine them as you go.

We never rely solely on the software and will always review the tags. For instance, when we ask it to come up with recommended tags we will look through the suggestions for tags that can be combined (e.g. “walking” and “cycling” into “Active transport”) and tags that can be split up (e.g. “Community engagement on road and train transport” split into “community engagement on road transport” and “community engagement on train transport”). We also check the tags assigned to each comment to make sure they are correct. For instance, one thing we look for is ‘assumed meaning’, where a comment is tagged on the assumption that the respondent meant something, even though not enough information was given to support that assumption (e.g. the comment “graffiti on my wall” can’t be tagged as “dislike graffiti” as they didn’t specify whether they liked it or not). However, given AI is a logic system, this doesn’t happen too often.
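The tag-combining step described above can be sketched in a few lines of Python. This is only an illustration of the idea, not RelevanceAI's own interface: the `suggested` data, the `merge_map` dictionary and the `merge_tags` function are all hypothetical names made up for this example.

```python
# Hypothetical data: comment id -> set of tags suggested by the tool.
suggested = {
    1: {"walking"},
    2: {"cycling", "parking"},
    3: {"walking", "cycling"},
}

# Reviewer-defined merges: narrow tag -> combined tag.
merge_map = {
    "walking": "Active transport",
    "cycling": "Active transport",
}


def merge_tags(tagged, merges):
    """Return a new mapping in which every merged tag is replaced by its combined tag."""
    return {
        comment_id: {merges.get(tag, tag) for tag in tags}
        for comment_id, tags in tagged.items()
    }


merged = merge_tags(suggested, merge_map)
```

Because each comment's tags are held in a set, a comment tagged with both “walking” and “cycling” ends up with a single “Active transport” tag rather than a duplicate. Splitting a tag is a different matter: each affected comment has to be re-read (or re-tagged) to decide which of the new tags applies, so it cannot be done with a simple lookup like this.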

Once we are happy with the tagging/coding, we can then output the tags/codes in a format that loads into our statistical analysis software (SPSS) so we can compare the findings across other research data in the survey (e.g. demographics or location) and identify statistically significant insights.
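The exact export format is not specified here, so the following is a sketch under an assumption: that each tag becomes its own 0/1 column (one row per comment), saved as a CSV file that a statistics package such as SPSS can import. The function name `to_indicator_rows` and the sample data are hypothetical.

```python
import csv
import io


def to_indicator_rows(tagged):
    """Turn {comment_id: set_of_tags} into a header plus rows of 0/1 indicators."""
    all_tags = sorted({tag for tags in tagged.values() for tag in tags})
    header = ["comment_id"] + all_tags
    rows = [
        [comment_id] + [1 if tag in tagged[comment_id] else 0 for tag in all_tags]
        for comment_id in sorted(tagged)
    ]
    return header, rows


# Hypothetical tagged comments; comment 3 received no tags.
tagged = {
    1: {"Active transport"},
    2: {"Active transport", "Parking"},
    3: set(),
}

header, rows = to_indicator_rows(tagged)

# Written to an in-memory buffer here; in practice this would be a file on disk.
buffer = io.StringIO()
writer = csv.writer(buffer)
writer.writerow(header)
writer.writerows(rows)
csv_text = buffer.getvalue()
```

With one column per tag, each theme can be cross-tabulated against demographics or location in the same way as any other yes/no survey variable.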

Use of RelevanceAI in projects

While I don’t have any project examples that are publicly available, I can share that we now use it for every project that has open-ended questions. We can load in the questions, run the tags and then output the codes in a quarter of the time, which frees us up to spend more time on strategically analysing the findings. We start off with an Excel file with a list of comments, and end up with a page in our report with a nice chart showing the % of comments falling under each theme, followed by some written insights as to what people were saying under these key themes, along with some quotes from those themes. Essentially the outputs are the same as they have always been; the process to get there is just a lot faster (yet still with quality and accuracy).
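The figure behind a chart like the one described above is the share of comments carrying each tag. A minimal sketch of that calculation follows; the function name `theme_percentages` and the sample tags are hypothetical and are not taken from any actual project.

```python
from collections import Counter


def theme_percentages(tagged):
    """Return {tag: percentage of comments carrying that tag}, most common first."""
    total = len(tagged)
    if total == 0:
        return {}
    counts = Counter(tag for tags in tagged.values() for tag in tags)
    return {tag: round(100 * n / total, 1) for tag, n in counts.most_common()}


# Hypothetical tagged comments: comment id -> set of tags.
tagged = {
    1: {"Active transport"},
    2: {"Active transport", "Parking"},
    3: {"Parking"},
    4: {"Active transport"},
}

percentages = theme_percentages(tagged)
```

In this example three of the four comments carry “Active transport” (75%) and two carry “Parking” (50%). Because a single comment can carry more than one tag, the percentages can add up to more than 100.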

And why not ChatGPT?

I guess technically you could paste the comments into ChatGPT and ask for a summary; however, it would be a far more convoluted process with mixed results. You would also run the risk of a data/privacy breach, as ChatGPT does not necessarily adhere to the Australian Privacy Principles in the same way as an Australian-based company would.

ASDF specialises in providing top quality social research and evaluation services in the fields of local government, sustainability, transport and not-for-profit. To find out more about ASDF Research, visit https://asdfresearch.com.au/